How High-Quality World-Scale AR Is Getting Closer

Overlaying a digital world right on top of our physical one has historically been difficult due to GPS inaccuracy and localization challenges.

An intro to Augmented Reality

Broadly speaking, there are two types of augmented reality (AR) that everyday consumers encounter.

The first type of AR detects objects in your vicinity and uses them as a starting point for spawning digital objects or worlds. This can be anything from plane detection to image targets, where you set parameters for how game objects are spawned once detection criteria are met.

The most popular use of this first type of AR is face tracking, which powers the many filters you’ve seen on Snapchat, Instagram, and even Zoom. Spark AR is a great tool for playing around with this using your PC/Mac camera.

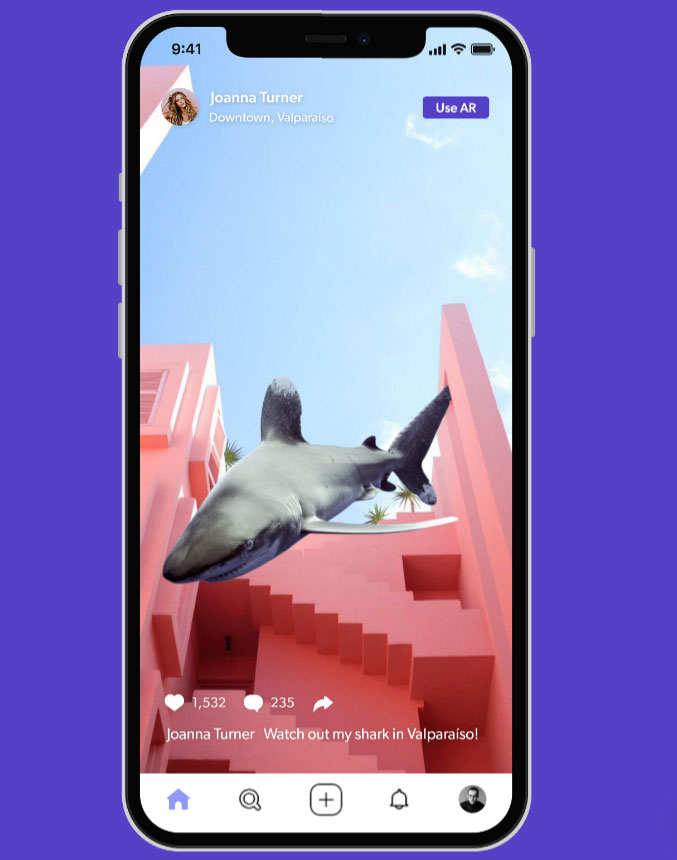

The second form of AR detects your physical location and overlays a scaled, pre-built digital world on top of it. This is commonly referred to as world-scale AR. The best example of this is Pokemon GO, where special events, PokeStops, and gyms show up at specific geographic coordinates. You go there, switch to a first-person view, then do your thing. But plans for world-scale AR are not limited to gaming: they include use cases like indoor navigation and the holy grail, multiplayer AR events in a single shared session.
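Placing content “at specific geographic coordinates” ultimately means converting latitude/longitude into local distances in meters around the player. Below is a minimal Python sketch of one common approach, an equirectangular approximation; the function name and coordinates are hypothetical, and real engines may use more precise projections.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters

def geo_to_local(lat, lon, origin_lat, origin_lon):
    """Approximate east/north offsets (meters) of (lat, lon) from an origin.

    Uses an equirectangular projection: good enough over the few hundred
    meters an AR session usually covers, not for long distances.
    """
    d_lat = math.radians(lat - origin_lat)
    d_lon = math.radians(lon - origin_lon)
    # A degree of longitude shrinks with the cosine of the latitude.
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(origin_lat))
    north = EARTH_RADIUS_M * d_lat
    return east, north

# Hypothetical example: a gym 0.001 degrees of latitude north of the player.
player = (34.0522, -118.2437)
gym = (34.0532, -118.2437)
east, north = geo_to_local(*gym, *player)
print(f"east={east:.1f} m, north={north:.1f} m")
```

Here the gym lands roughly 111 m straight ahead on the north axis, which is where the engine would spawn it relative to the player.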

Test footage for Neon, an unreleased Niantic multiplayer AR game

What’s so hard about world-scale AR?

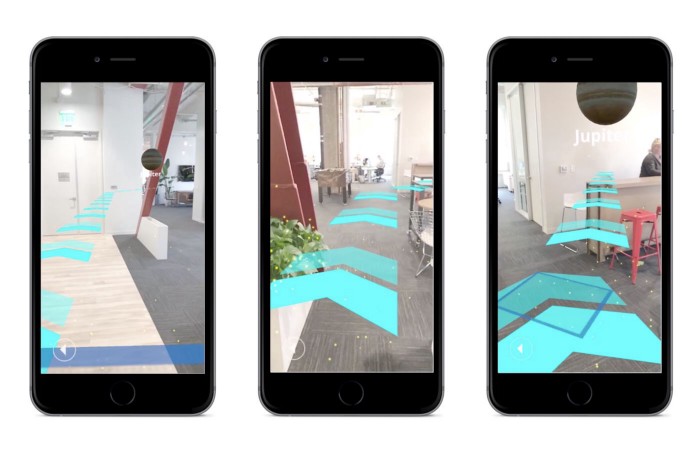

If you have a keen eye, I’m sure you’ve noticed that something in the last two frames seems a little off. Item placement and markers aren’t exactly where they should be, and that brings us to the first reason AR is not yet where it could be: digital and physical world alignment.

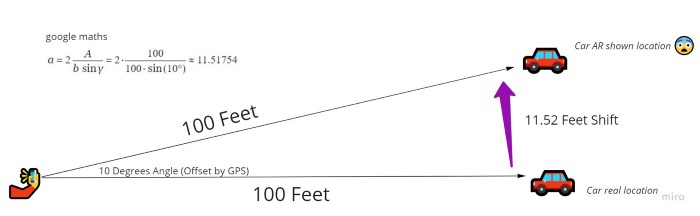

Let’s say I’m in Los Angeles, looking for where I parked my car. I have an app, FindMyCar, that shows a pointer in my camera view to the bookmark I dropped when I parked. The signal might not be great, so my phone reports that I’m facing 10 degrees east of the direction I’m actually facing. In the app, the digital car marker in front of me is now shifted by those 10 degrees, and the error gets worse the farther away the object is.
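To make “it gets worse the farther away the object is” concrete, here is a small Python sketch. It assumes the position estimate is correct and only the heading is off, so the marker is drawn at the right distance but rotated; the distances are made-up examples.

```python
import math

def marker_offset_m(distance_m, heading_error_deg):
    """Distance between where a marker should be and where it is drawn.

    The marker is drawn at the right range but rotated by the heading error,
    so the true and drawn positions are two points on a circle of radius
    distance_m separated by that angle: a chord of length 2 * d * sin(theta / 2).
    """
    theta = math.radians(heading_error_deg)
    return 2 * distance_m * math.sin(theta / 2)

for d in (10, 50, 200):
    print(f"{d:>4} m away -> marker off by {marker_offset_m(d, 10):.1f} m")
```

With a 10 degree error, a car 10 m away is drawn about 1.7 m off, one 50 m away about 8.7 m off, and one 200 m away about 35 m off: the error scales linearly with distance.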

So that’s not very helpful if I’m looking for my car. The same misalignment is why the other two apps look off in the earlier photos. For some world-scale applications like Pokemon GO, placement can be off by a little: if Charizard is slightly to the left or right on that street, it doesn’t break your immersion. And immersion is really important, both to the user experience and to the usefulness of the application.

If you want to see how Matthew Halberg attempted to build an outdoor navigation/text-based AR app in 2017, with a deeper explanation of the issues he encountered, check out his old video here. The repo is now deprecated, so don’t bother trying to run it.

The second big issue in world-scale AR is localization, or in layman’s terms, detecting the world around you and syncing your position with the digital world (think x and y positions). In the example above, this would be like the app placing me maybe 200 feet from where I actually am, making the offset errors even worse. Localization also usually takes time: you have to point your phone in different directions to detect enough surfaces before the digital world can spawn.
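Localization and heading errors compound because the app draws every marker relative to its estimated pose. The Python sketch below, a simplified 2D model, expresses the car’s world position in the phone’s frame twice: once with the true pose (where the car really appears) and once with the estimated pose (where the marker is drawn). The coordinates are hypothetical, with 61 m standing in for roughly 200 feet.

```python
import math

def world_to_device(point, device_pos, device_heading_deg):
    """Express a world point (east, north) in the device frame.

    Device frame: x to the right, y straight ahead. Heading is measured
    clockwise from north, the way a compass reports it.
    """
    dx = point[0] - device_pos[0]
    dy = point[1] - device_pos[1]
    h = math.radians(device_heading_deg)
    right = dx * math.cos(h) - dy * math.sin(h)
    ahead = dx * math.sin(h) + dy * math.cos(h)
    return right, ahead

car = (0.0, 100.0)                          # parked car, 100 m north of the origin
true_pos, true_heading = (0.0, 0.0), 0.0    # where I really am, facing north
est_pos, est_heading = (61.0, 0.0), 10.0    # ~200 ft east and 10 degrees off

where_it_is = world_to_device(car, true_pos, true_heading)
where_drawn = world_to_device(car, est_pos, est_heading)
error = math.dist(where_it_is, where_drawn)
print(f"marker drawn {error:.1f} m from the real car")
```

The resulting error is larger than the 61 m position error alone, because the heading error rotates the already-shifted marker further away.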

Luckily for us, years of work by smart engineers and product teams have produced quite a few interesting solutions to the problem.

Go big or go… small

As with many problems in life, business, and technology, people either try to tackle everything at once or isolate one piece of the whole. This problem has been approached no differently.

Our first approach is the “let’s build this room up 1:1” method: essentially creating a perfect clone of all the objects in a room to produce a seamless experience that is agnostic of geographic location. The second approach is the “world digital clone” method, which focuses on mapping digital experiences geographically onto the real world.

Let’s dive deeper into both approaches:

Rebuild your room to experience your room

Let’s say I’m planning a scavenger hunt for new students to help them get more accustomed to the main campus building. If I have ten groups of students, I could hide ten sets of items or find a hundred hiding spots, or I could build a more fun and scalable experience in AR!

With a scavenger hunt indoors, we need more than a cloud anchor for an accurate experience. So what if we placed all the hints and hidden items in a digital model of the building first?

You could step into Blender and painstakingly model every room (and the furniture within it) by hand, or you could scan the objects into a point cloud/digital mesh. A few years ago, the team at 6D.ai scanned their office in 3 minutes using their phones to produce the following mesh:

Clearly it isn’t perfect: the rougher or more detailed an object’s edges, the harder it is to scan. Luckily, users will never see this scan; it is only used for localization. Assuming we did this for the campus building, when students open the app inside any room, it will scan the surroundings and place itself within the digital model. With a combination of AI and depth sensors like LiDAR, the phone quickly scans its surroundings and “recognizes” where the student is standing. Then the hints and items we placed for the scavenger hunt spawn right in, without the messy scanned mesh.
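“Placing itself within the digital model” boils down to finding the rotation and translation that line up what the phone sees with the scanned model, then using that pose to spawn hidden items. The Python sketch below is a deliberately simplified stand-in: a 2D least-squares rigid alignment (the 2D Kabsch/Procrustes solution), assuming we already know which observed point matches which model point. Real systems match visual or depth features automatically and solve in 3D; the function names and data here are hypothetical.

```python
import math

def fit_rigid_2d(model_pts, observed_pts):
    """Least-squares rotation and translation taking model_pts onto observed_pts.

    Assumes model_pts[i] and observed_pts[i] are the same physical feature
    (known correspondences). Returns (theta_radians, (tx, ty)).
    """
    n = len(model_pts)
    mx = sum(p[0] for p in model_pts) / n
    my = sum(p[1] for p in model_pts) / n
    ox = sum(q[0] for q in observed_pts) / n
    oy = sum(q[1] for q in observed_pts) / n
    # Accumulate dot and cross products of the centered point pairs;
    # the best-fit angle is atan2(sum of crosses, sum of dots).
    dots = crosses = 0.0
    for (px, py), (qx, qy) in zip(model_pts, observed_pts):
        px, py, qx, qy = px - mx, py - my, qx - ox, qy - oy
        dots += px * qx + py * qy
        crosses += px * qy - py * qx
    theta = math.atan2(crosses, dots)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated model centroid onto the observed centroid.
    return theta, (ox - (c * mx - s * my), oy - (s * mx + c * my))

def model_to_device(pt, theta, t):
    """Apply the fitted pose to a point given in model coordinates."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * pt[0] - s * pt[1] + t[0], s * pt[0] + c * pt[1] + t[1])

# Hypothetical data: corners of a table in the scanned model, and the same
# corners as the phone currently sees them (rotated 30 degrees and shifted).
model = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
seen = [model_to_device(p, math.radians(30), (5.0, -1.0)) for p in model]

theta, t = fit_rigid_2d(model, seen)
hint = (1.0, 3.0)  # where we hid a clue in the model
print(round(math.degrees(theta), 3), model_to_device(hint, theta, t))
```

Once the pose is recovered, every hint stored in model coordinates can be spawned with `model_to_device`, which is why the scan itself never needs to be shown to the student.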

The iPad Pro released in 2020 came with LiDAR and opened up the door for much faster plane detection and thus localization for AR applications.

Scanning everything within camera range in real-time.

So now we have solutions for building a digital model and for localizing a user within it, but we still need a platform to build the full scavenger hunt in (placing the items, hints, and various interactions). Enter Unity, a powerful game engine that has recently expanded into a more diverse set of applications, including machine learning and VR/AR. One recently released tool, Unity MARS, is built specifically for experiences tied to particular spaces, like the scavenger hunt we’ve described.

Here we see the digital model of the room and the placement of an interactive object on top. Only that object will be seen when the user opens the app.

But what if I wanted to create a scavenger hunt across the whole campus? It wouldn’t be viable to scan everything, including the outdoors - and some rooms might look really similar to one another. We need to incorporate geography into our application.

Better put a pin in it, fast

Have you ever used a Snapchat filter for a specific event or location? These are essentially implemented as geographic zones of availability, otherwise known as “geofences”.
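A geofence check is just a point-in-polygon test on the user’s coordinates. Here is a minimal Python sketch using the standard ray-casting method; it treats latitude/longitude as flat coordinates, which is a reasonable assumption for event-sized fences, and the campus fence below is hypothetical.

```python
def in_fence(lat, lon, fence):
    """Ray-casting point-in-polygon test.

    fence is a list of (lat, lon) vertices. Coordinates are treated as
    planar, which is fine for small fences away from the poles and the
    antimeridian.
    """
    inside = False
    n = len(fence)
    for i in range(n):
        lat1, lon1 = fence[i]
        lat2, lon2 = fence[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude where this edge crosses the point's latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            # Count crossings of a ray cast toward increasing longitude.
            if lon < cross_lon:
                inside = not inside
    return inside

# Hypothetical rectangular fence around a campus.
campus = [(34.070, -118.450), (34.070, -118.440),
          (34.075, -118.440), (34.075, -118.450)]
print(in_fence(34.072, -118.445, campus), in_fence(34.080, -118.445, campus))
```

An odd number of crossings means the point is inside, so the filter (or any fenced content) is unlocked. As the next paragraph notes, this only tells you that you are somewhere in the zone, not exactly where.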

However, drawing a fence doesn’t work for AR applications that need pinpoint accuracy - fencing the parking lot doesn’t help me find my car. In this case, we need to use cloud anchors instead.

Honestly, cloud anchors are still a bit clunky to set up, since documentation and good examples are scattered. However, while anchors used to last only a day, new ones can last up to a year! Essentially, you scan one area (say, a statue), then attach it to a geographic point (in latitude and longitude coordinates). For a more nuanced implementation, check out Google’s explainer here.
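On the app side, you typically need to track which anchors exist, where they are, and whether they are still alive before asking the phone to resolve them. The Python sketch below shows one hypothetical way to do that bookkeeping: `AnchorRecord` and its fields are illustrative, not an ARCore type, and it uses the haversine formula to find live anchors near the user.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class AnchorRecord:
    """Hypothetical app-side record for a hosted anchor (not an ARCore type)."""
    anchor_id: str
    lat: float
    lon: float
    hosted_at: datetime
    ttl_days: int  # e.g. 1 for short-lived anchors, up to 365 for long-lived ones

    def expired(self, now):
        return now >= self.hosted_at + timedelta(days=self.ttl_days)

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    r = 6_371_000.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def anchors_to_resolve(anchors, lat, lon, now, radius_m=100.0):
    """Unexpired anchors within radius_m of the user, nearest first."""
    nearby = [(haversine_m(lat, lon, a.lat, a.lon), a)
              for a in anchors if not a.expired(now)]
    nearby.sort(key=lambda pair: pair[0])
    return [a for dist, a in nearby if dist <= radius_m]

now = datetime(2021, 6, 1, tzinfo=timezone.utc)
anchors = [
    AnchorRecord("statue", 34.0522, -118.2437, now - timedelta(days=30), 365),
    AnchorRecord("old-poster", 34.0523, -118.2437, now - timedelta(days=2), 1),
    AnchorRecord("far-away", 34.1000, -118.2437, now - timedelta(days=1), 365),
]
print([a.anchor_id for a in anchors_to_resolve(anchors, 34.0522, -118.2436, now)])
# ['statue']
```

The one-day anchor has expired and the third is about 5 km away, so only the statue anchor is worth trying to resolve.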

When anchors are placed algorithmically, as in Pokemon GO, reasonable locations can be found by curating a dataset of appropriate places. Niantic has done this with data from its apps and initiatives like Wayfarer, ultimately producing one of the “most comprehensive dataset of millions of the world’s most interesting and accessible places to play our games that are nearly all user generated” (source).

Best of both “worlds”

While these approaches solve different issues, they are ultimately used together when building new AR apps like the scavenger hunt experience. Let’s take a quick look at two examples of this in practice:

Pokemon GO has already been mentioned, though it is largely a single-player AR experience with shared game states. Niantic’s upcoming game Codename: Urban Legends does incorporate multiplayer sessions (likely developed from Neon, referenced earlier).


Superworld is a real estate app on the blockchain (Ethereum) where each plot of land can have an AR experience built upon it. This means each plot will have its own cloud anchor, and everything from videos and posters to 3D animated models can be placed in the digital overlay around it by the plot owner.
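One simple way a plot-based app could tie content to land is to divide the globe into a fixed grid and key each plot’s anchor by its cell. The Python sketch below illustrates that idea only; Superworld’s actual plot scheme isn’t described here, so the grid size and function names are hypothetical.

```python
import math

PLOT_SIZE_DEG = 0.001  # hypothetical plot size (~111 m of latitude), not Superworld's real grid

def plot_id(lat, lon):
    """Map a coordinate to the (row, col) of the grid plot containing it."""
    return (math.floor((lat + 90) / PLOT_SIZE_DEG),
            math.floor((lon + 180) / PLOT_SIZE_DEG))

def plot_center(row, col):
    """Center of a plot, a natural reference point for that plot's anchor."""
    return (row * PLOT_SIZE_DEG - 90 + PLOT_SIZE_DEG / 2,
            col * PLOT_SIZE_DEG - 180 + PLOT_SIZE_DEG / 2)

# Hypothetical lookup table from plot to the owner's hosted anchor.
plot_anchors = {plot_id(34.05221, -118.24371): "anchor-owned-by-alice"}

# A visitor standing a few meters away falls in the same plot.
visitor_plot = plot_id(34.05229, -118.24379)
print(visitor_plot, plot_anchors.get(visitor_plot))
```

Because every coordinate maps to exactly one cell, the app can fetch the right owner’s anchor and overlay content with a single dictionary lookup.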

A reasonable stack for building all this could be Unity as the game engine, Mapbox for geographic data and models, ARCore for cloud anchor APIs, and 6D.ai (now part of Niantic) for scanning. Localization is largely hardware dependent (does your phone have depth-sensing hardware like LiDAR, can it handle 5G, etc.).

Where do we go from here?

Ultimately, we’re looking to overlay the digital world accurately and quickly. That’s a really tough ask, but the rewards will be well worth the effort and the wait. With upcoming improvements in GPS, 5G, phone hardware, and AR engineering tooling, I absolutely can’t wait to experience and build world-scale AR applications.